Seeed Studio, NVIDIA, and Hugging Face jointly initiated two Embodied AI Hackathons, which we hosted in October 2025 in Shenzhen, China, and Mountain View, U.S. Over 700 developers registered, and more than 30 teams came together to build and contribute to this open-source robotics playground!

The theme of the event was designing home-task and cooking robots and bringing them to life in a home setting. Contestants were divided into 15 teams at each site and got hands-on with real robot kits: Hugging Face LeRobot SO-ARM101 and FashionStar StarAI robot arms, powered by the NVIDIA Jetson Thor platform with the latest JetPack 7 SDK, NVIDIA Isaac Sim, and the LeRobot framework by Hugging Face. Hugging Face is also the hub for accessing models, datasets, and tools in PyTorch, giving contestants everything needed to create real-world robotics breakthroughs.

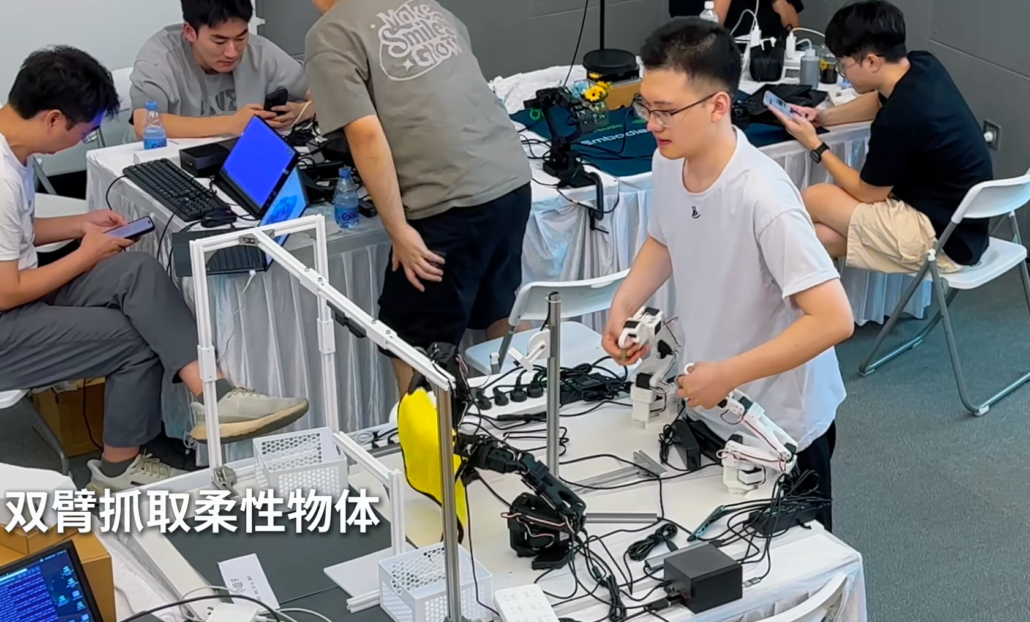

We saw many impressive ideas and polished demos during the event, including dual-arm operation, long-horizon task completion, strong generalization, depth sensing, mobile manipulation with LeKiwi/XLeRobot, and more!

Every team got the full development experience:

- Assembling and calibrating the robot hardware

- Collecting datasets of more than 150 episodes via teleoperation or Isaac simulation (more good data means better robot behavior)

- Training a model, such as ACT, Diffusion Policy, or a VLA model like Isaac GR00T N1.5 or Hugging Face SmolVLA, with imitation learning and reinforcement learning methods

- Deploying the policies on the NVIDIA Jetson Thor developer kit for accelerated inference, to build affordable, capable robots that can take on everyday challenges such as pick and place or folding cloth

You can get hands-on with the whole hackathon pipeline by following our wiki step by step: [NEW] Fine-tune GR00T N1.5 for LeRobot SO-101 Arm and Deploy on Jetson AGX Thor.

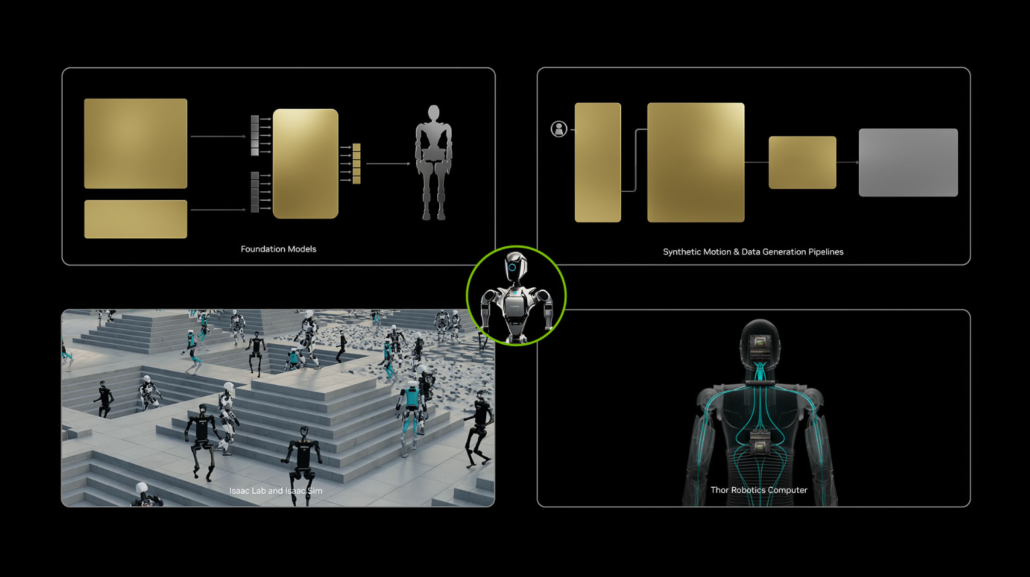

Completing this journey will give you a practical, behind-the-scenes look at NVIDIA’s three-computer philosophy behind modern robotics: massive computational power to train robot foundation models, such as the latest NVIDIA Isaac GR00T model for cognition and control; simulation frameworks powered by NVIDIA Omniverse and Cosmos, with data pipelines for generating synthetic data and environments; and finally the NVIDIA Jetson AGX Thor, which serves as the robot’s brain, delivering efficient, real-time inference for intelligent physical interaction.

Here are all the other necessary tech resources, which every contestant should check before joining this event:

- Get started with SO-ARM101

- Get Started with Fashion Star Star AI Robot Arm

- ROS2 Moveit for Star AI Robot Arm

- SO-Arm101 Reinforcement Learning

- Wiki to import robot arm into NVIDIA Isaac Sim

- Paper – Diffusion Policy

- Video for Diffusion Policy Q&A

- Paper – ACT (Action Chunking with Transformers)

- Post-Training Isaac GR00T N1.5 for SO-Arm101

- Get started with Lightwheel LeIsaac

- XLeRobot Getting Started

In case you missed either hackathon on site, here are the highlights of what happened during this wonderful journey:

Winning Project & Demo Collections

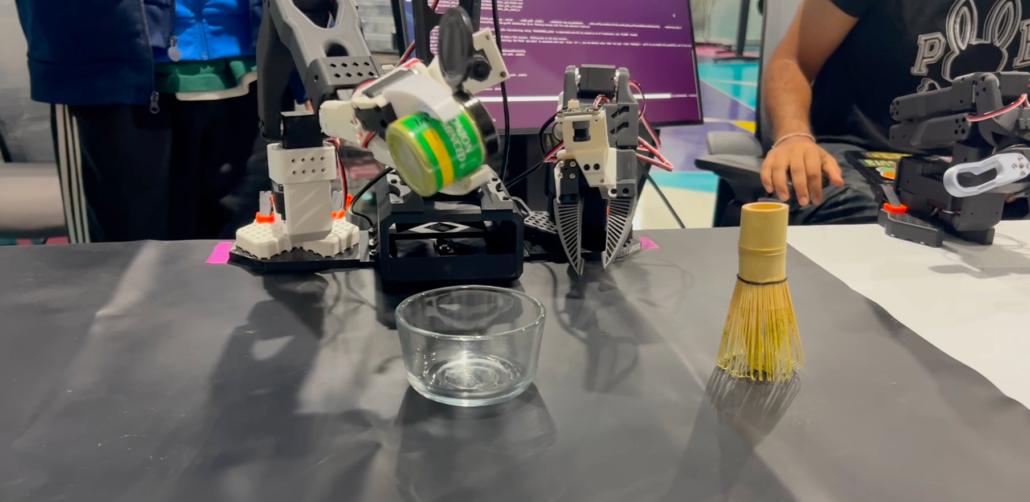

1. Champion team in the U.S. session: Matcha making by the UIUC SIGRobotics team

They built bimanual robot arms powered by the XLeRobot system, trained an NVIDIA GR00T N1.5 model via NVIDIA Brev, and deployed inference on Jetson Thor. The robot’s task is to pour out the matcha powder, add water to the bowl, and then mix them together to make matcha tea.

The main challenge during dataset preparation is that GR00T has no memory: it cannot recall past actions, which can lead to confusion during repeated or symmetrical tasks.

To address this, they ensured that each task had a distinct start and end state, or modified the trajectory to make the states asymmetric and prevent overlap. For example, during the water-pouring subtask in the matcha-making process, they deliberately dropped the water container at the end to signal task completion. Similarly, they differentiated the matcha-pouring task by visually marking the bowl as either empty or full. For the final whisking task, they made sure the whisk moved clockwise inside the bowl, and ended recordings abruptly to avoid confusion between stopping and continuing the motion.
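To see why distinct start and end states matter for a policy without memory, here is a minimal toy sketch. It is not the team’s code and not the GR00T data format: the observation and action labels are made up for illustration, and real observations would be camera frames and joint states rather than strings. The hypothetical helper `find_ambiguous_observations` simply flags observations that appear with more than one action in a recording.

```python
from collections import defaultdict


def find_ambiguous_observations(episode):
    """Return observations that are paired with more than one action.

    `episode` is a list of (observation, action) pairs. A policy without
    memory sees only the current observation, so any observation listed
    here gives it conflicting training targets.
    """
    actions_by_obs = defaultdict(set)
    for observation, action in episode:
        actions_by_obs[observation].add(action)
    return {
        obs: sorted(actions)
        for obs, actions in actions_by_obs.items()
        if len(actions) > 1
    }


# Symmetric recording (illustrative labels): the scene looks the same
# before and after pouring, so two observations repeat.
symmetric = [
    ("container_on_table", "lift_container"),
    ("container_over_bowl", "pour"),
    ("container_over_bowl", "return_container"),
    ("container_on_table", "stop"),
]

# Asymmetric recording: the bowl state is visible and the container is
# dropped at the end, so every observation is unique.
asymmetric = [
    ("container_on_table", "lift_container"),
    ("container_over_empty_bowl", "pour"),
    ("container_over_full_bowl", "drop_container"),
    ("container_dropped", "stop"),
]

print(find_ambiguous_observations(symmetric))
print(find_ambiguous_observations(asymmetric))
```

In the symmetric recording both repeated observations are flagged, while the asymmetric recording returns an empty dictionary, which is the property the team was aiming for when they dropped the container or marked the bowl.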

Resources to learn more about this project: Hackster | GitHub

2. First Runner-up team in the U.S. session: Sprinkle Robot

Teaming up with Bruce Kim, Joon Kim, and Peiqing Xia, the group built Sprinkle Robot — a continuous, multi-step robotic system that performs end-to-end actions: picking up a sugar container, sprinkling evenly across the plate, rotating the plate using a custom button 🎃, finishing the other half, and finally placing the container upright. The result is a neatly automated sugar-sprinkling workflow for every cookie.

Their system was powered by a fine-tuned SmolVLA trained on 170 episodes and deployed on NVIDIA Jetson Thor, and it was also migrated successfully to the smaller Jetson Orin Nano. A notable design choice was breaking the long-horizon task into 4 focused models: grab, tilt, press, and place. By running each model with its own activation timing and sequence, the team gained more reliable control over each sub-action. This modular approach made the overall behavior smoother and easier to coordinate across the entire task.
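The idea of chaining focused models can be sketched in a few lines. This is only an illustration under assumptions, not the team’s implementation: the stage names come from the description above, but the `Stage` class, `run_sequence`, the fixed per-stage step budgets, and the dummy policies standing in for fine-tuned models are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Observation = Dict[str, float]


@dataclass
class Stage:
    name: str
    policy: Callable[[Observation], str]  # stand-in for a fine-tuned model
    max_steps: int                        # hypothetical per-stage step budget


def run_sequence(
    stages: List[Stage],
    get_observation: Callable[[], Observation],
    send_action: Callable[[str], None],
) -> List[Tuple[str, str]]:
    """Run each stage in order, giving every stage its own step budget."""
    log: List[Tuple[str, str]] = []
    for stage in stages:
        for _ in range(stage.max_steps):
            action = stage.policy(get_observation())
            send_action(action)
            log.append((stage.name, action))
    return log


def make_dummy_policy(name: str) -> Callable[[Observation], str]:
    # Placeholder: a real policy would run model inference on the observation.
    return lambda observation: f"{name}_action"


stages = [
    Stage("grab", make_dummy_policy("grab"), max_steps=3),
    Stage("tilt", make_dummy_policy("tilt"), max_steps=2),
    Stage("press", make_dummy_policy("press"), max_steps=1),
    Stage("place", make_dummy_policy("place"), max_steps=2),
]

sent: List[str] = []
log = run_sequence(
    stages,
    get_observation=lambda: {"t": 0.0},  # fake sensor reading
    send_action=sent.append,             # fake robot interface
)
print([name for name, _ in log])
```

A fixed step budget is the simplest possible activation rule. A real system would more likely switch stages on a completion signal or a timer tuned per sub-action.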

Learn more about this project.

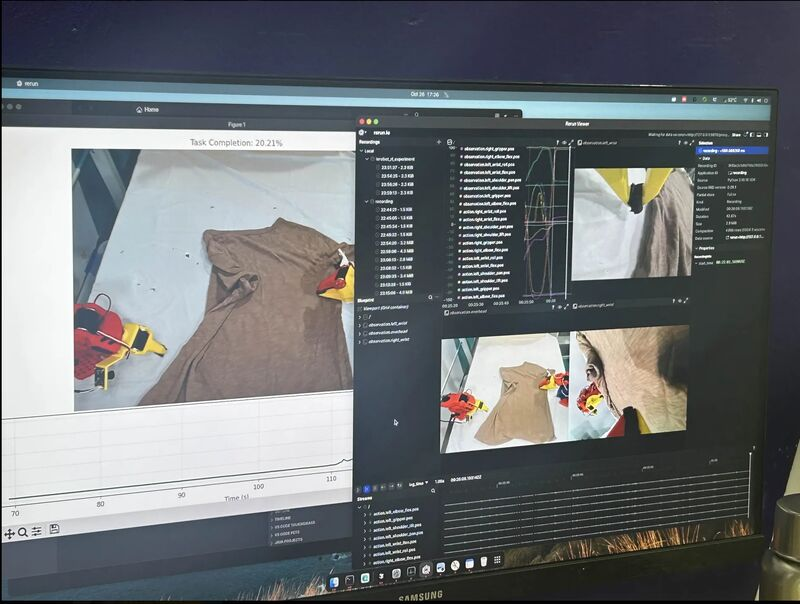

3. Second Runner-up team in the U.S. session: Cloth Folding Robot

This project aimed to leverage dual robot arms by fine-tuning Vision-Language-Action (VLA) models specifically for the complex task of folding a T-shirt. The team wanted to explore how quickly a compelling behavior could be developed and deployed.

In just a day and a half, they collected manual demonstrations, experimented with Isaac Sim for scenario generation, and fine-tuned an ACT model. They even advanced to creating a learned reward function for reinforcement learning, enabling the robot to recover from unseen errors. Ultimately, deploying this pipeline on the SO-ARM101 shows the iterative speed these tools provide for rapid prototyping and testing.

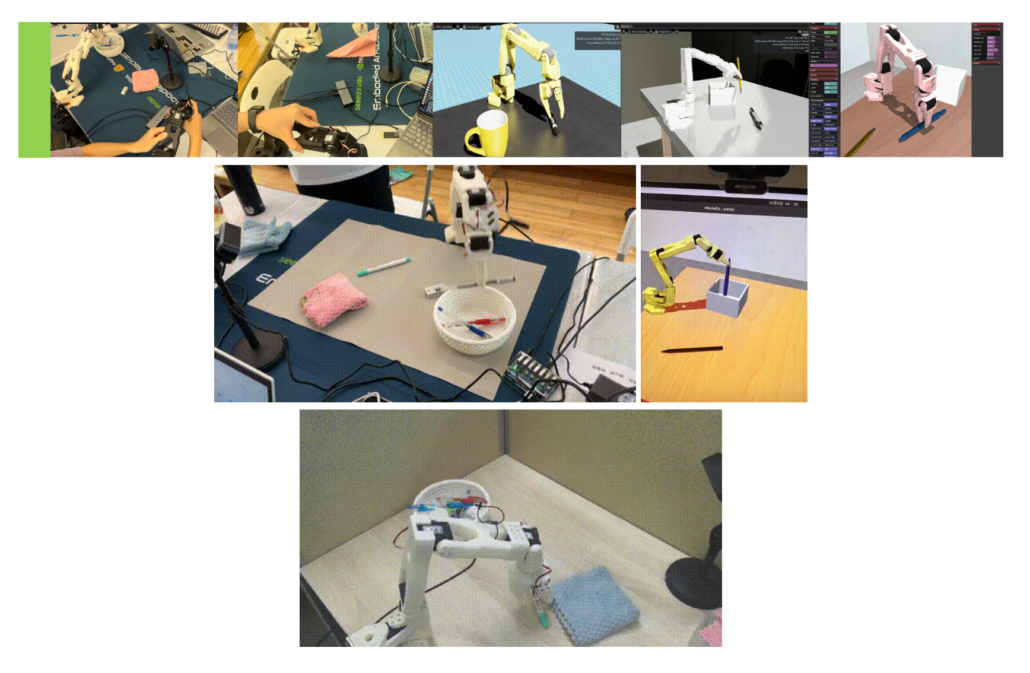

4. Champion team in the China session: Pick&Place with high generalization

This project tackled the complex challenge of enabling a robot to perform a long-horizon desktop tidying task. Using the LeRobot framework, the team fine-tuned NVIDIA’s GR00T N1.5 model and deployed it on SO-ARM101 with a Jetson Thor developer kit. The goal was to have the robot sequentially gather pens and erasers into a container and then wipe the desk clean.

To achieve robust performance, the model was trained on a diverse, multi-source dataset of 300 episodes. This dataset blended real-world teleoperated data (90%) with simulated data (10%), all enhanced with domain randomization to vary object positions and types. The resulting policy demonstrated stable, precise, and swift movements, successfully showcasing effective generalization for the task.
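As a rough illustration of the 90/10 blend, the sketch below assembles a 300-episode training list from two pools. It assumes the percentages are counted per episode, which would give 270 real and 30 simulated episodes. The function `build_mixed_dataset`, the pool sizes, and the episode names are hypothetical, and domain randomization is not modeled here.

```python
import random
from typing import List


def build_mixed_dataset(
    real: List[str],
    sim: List[str],
    total: int,
    real_fraction: float,
    seed: int = 0,
) -> List[str]:
    """Sample `total` episodes with the requested share of real data."""
    rng = random.Random(seed)  # fixed seed keeps the mix reproducible
    n_real = round(total * real_fraction)
    n_sim = total - n_real
    if n_real > len(real) or n_sim > len(sim):
        raise ValueError("not enough episodes in one of the sources")
    mixed = rng.sample(real, n_real) + rng.sample(sim, n_sim)
    rng.shuffle(mixed)  # interleave real and simulated episodes
    return mixed


# Hypothetical episode pools, identified by name only.
real_pool = [f"real_{i:03d}" for i in range(400)]
sim_pool = [f"sim_{i:03d}" for i in range(100)]

dataset = build_mixed_dataset(real_pool, sim_pool, total=300, real_fraction=0.9)
n_real = sum(name.startswith("real_") for name in dataset)
print(len(dataset), n_real, len(dataset) - n_real)  # 300 270 30
```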

Learn more about the project.

5. First Runner-up team in the China session: Soft textiles folding

This project executes a complete towel-folding routine. The challenge involved programming a dual-arm SO-ARM101 robot to perform a long-horizon sequence: picking a towel from the table, spreading it flat, and finally folding it.

The team built a robust perception and control system. Using an RGB camera for visual input, the robot identified the towel and key locations. The team integrated the Hugging Face LeRobot framework for policy learning and deployed the model on the NVIDIA Jetson Thor, which served as the main computational brain for real-time accelerated inference. The whole pipeline enables the robot to perceive its environment and execute this complex, multi-step task.

6. Second Runner-up team in the China session: Mate XLeRobot

The whole team (Ryan, Isaac, Qi, KAHO, and Bubbles) redesigned and optimized the XLeRobot dual-arm setup, making it feel more human-like to control and far more versatile in real use. They achieved this by flipping both arms into a symmetrical, human-oriented layout, making VR control feel like moving your own hands. Motion planning also became more intuitive thanks to smoother inverse kinematics and an improved range of motion.

One of the biggest upgrades was a vertical lift-rail system built using MakerMods sliders, allowing both arms to reach below desks and above shelves for everyday manipulation tasks.

The final demo showcased dual-robot collaboration: Mate XLeRobot handled hand-to-hand object passing, while the base-spec XLeRobot ran a VLA (Vision-Language-Action) model to autonomously pick up and store objects. Together, the two robots highlighted how coordinated embodied AI systems can handle complex, multi-step tasks with precision and fluidity.

Learn more about this project.

A Special Thank You Note to Our Developer Ecosystem Friends

Our sincere thanks to our amazing mentors and judges from NVIDIA, Hugging Face, K-Scale Labs, XLeRobot, Lightwheel, Solo Tech, and Fashion Star for sharing your embodied/Physical AI expertise and providing guidance throughout the event for all contestants. We truly appreciate the time, insight, and energy you brought to every discussion. It’s always exciting to keep collaborating with you to grow the developer ecosystem around the LeRobot platform and to bring even more practical tools and resources to the community.

Also, many thanks to Circuit Launch for providing such an amazing and collaborative venue. It was truly the perfect environment for creativity and robotics innovation, with a rich set of tools and machines, and inspiring ideas coming up every day!

This event wouldn’t have been possible without the dedication of our behind-the-scenes team at Seeed Studio, NVIDIA, and Hugging Face, whose thoughtful planning and coordination made everything run smoothly. We’ve learned a lot from this experience and are excited to bring even more creative collaborations in the future.

Thank you all again for being part of this journey. We look forward to welcoming even more developers into the community and empowering it with fresh and crazy insights! Happy hacking, and we’ll see you next year! 🤗

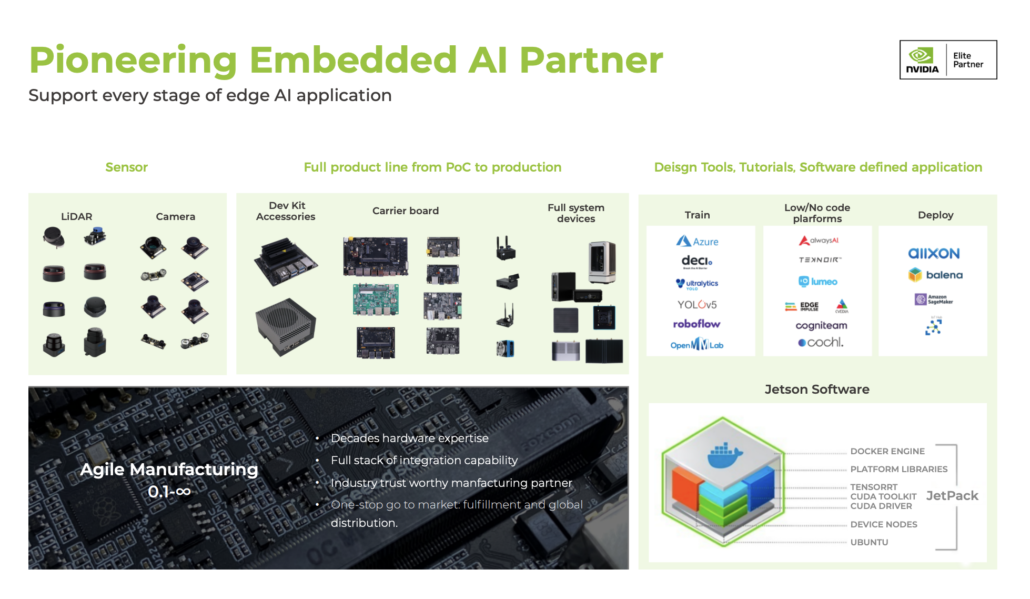

Seeed: NVIDIA Jetson Ecosystem Partner

Seeed is an Elite partner for edge AI in the NVIDIA Partner Network. Explore more carrier boards, full system devices, customization services, use cases, and developer tools on Seeed’s NVIDIA Jetson ecosystem page.

Join the forefront of AI innovation with us! Harness the power of cutting-edge hardware and technology to revolutionize the deployment of machine learning in the real world across industries. Be a part of our mission to provide developers and enterprises with the best ML solutions available. Check out our successful case study catalog to discover more edge AI possibilities!

Take the first step and send us an email at edgeai@seeed.cc to become a part of this exciting journey!

Download our latest Jetson Catalog to find one option that suits you well. If you can’t find the off-the-shelf Jetson hardware solution for your needs, please check out our customization services, and submit a new product inquiry to us at odm@seeed.cc for evaluation.